Summarize the Content of the Blog

Key Takeaways

Security teams receive hundreds of alerts daily, with more than half being false positives [1]

64% of security tickets generated per day are not being worked due to alert overload [2]

Risk-Based Alerting can reduce alert volume by 50-90% while increasing alert fidelity [3]

SOAR automation saves analysts thousands of hours by automatically triaging alerts in seconds [4]

Event Sequencing identifies actionable threats by correlating related alerts into single incidents [5]

Data utilization visibility helps identify which sources generate valuable alerts versus noise

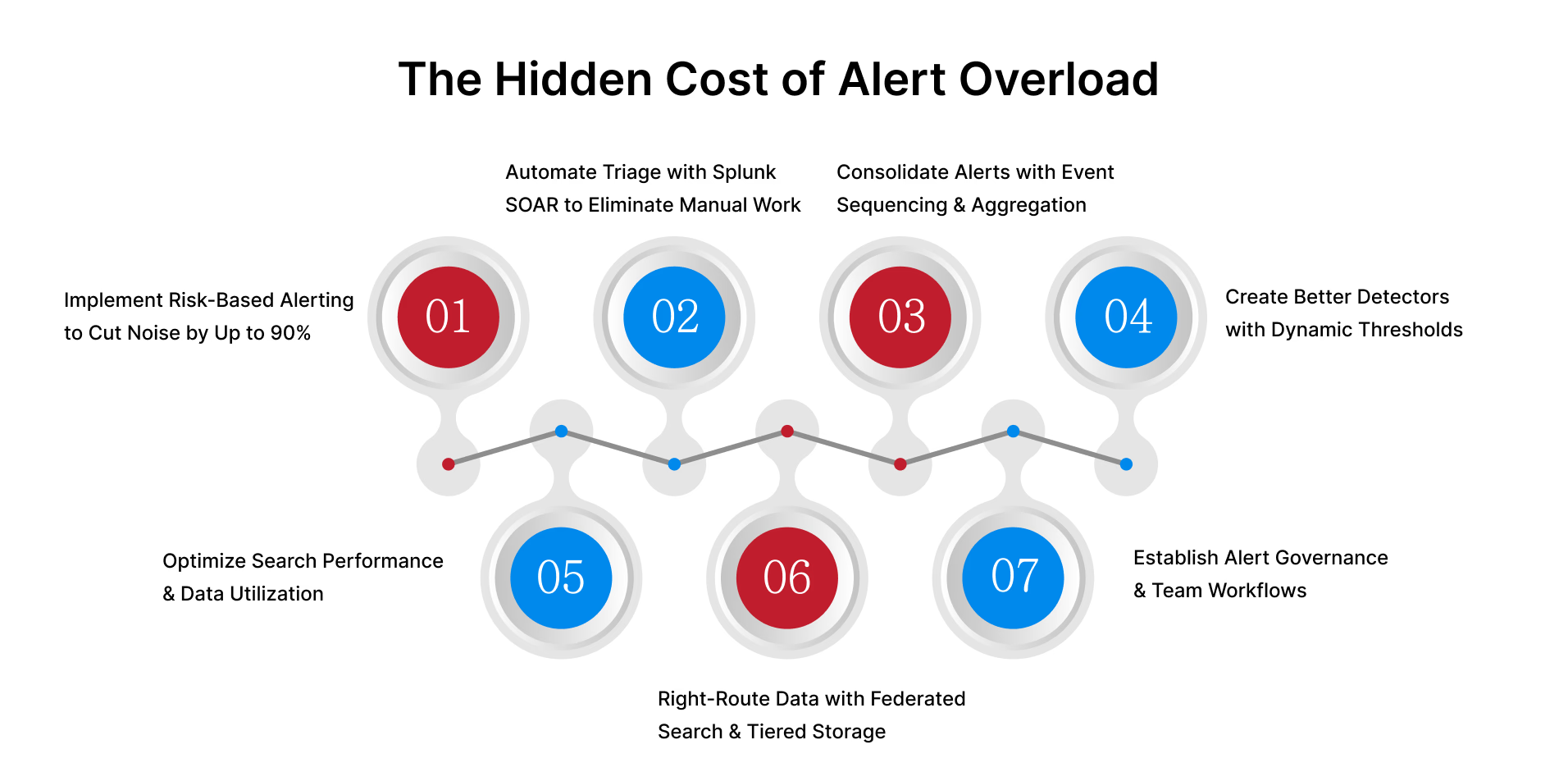

The Hidden Cost of Alert Overload

Your security team starts the day facing hundreds of alerts. By mid-morning, critical notifications are buried under false positives. By afternoon, analyst burnout sets in. If this sounds familiar, you're not alone.

According to a 2022 study, security teams receive hundreds of alerts per day, with more than half being false positives . The problem extends beyond volume: approximately 64% of security tickets generated daily aren't being worked . When everything is marked critical, nothing truly is.

The 2013 Target breach provides a sobering example. Their FireEye security system detected the malware and alerted the security team in Bangalore, who notified Minneapolis headquarters. Yet no action was taken [6]. The alerts were evaluated but deemed not to warrant immediate follow-up. By the time the breach was discovered, 40 million credit card numbers and 70 million customer records had been compromised [7]. The cost? Over $200 million, countless lawsuits, and lasting reputational damage [8].

Splunk alert fatigue isn't just an operational challenge—it's a strategic vulnerability. But it's also solvable. Organizations implementing strategic Splunk optimization approaches have achieved 50-90% alert reductions while improving detection quality. Let's explore how.

1. Implement Risk-Based Alerting to Cut Noise by Up to 90%

Traditional alerting creates a notable event for every detection, flooding analysts with low-level alerts that miss the bigger picture. Risk-Based Alerting (RBA) takes a fundamentally different approach.

How Risk-Based Alerting works:

• Splunk's detection logic provides observations tagged with security metadata like MITRE ATT&CK techniques and risk scores

• Risk scores dynamically adjust based on attributes like privileged user involvement or externally-facing servers

• Notables only fire when enough related observations accumulate in the Risk Index

• Analysts see all related risk events in a single notable with full context for faster investigation

The impact: When Splunk customers use RBA, they see a 50% to 90% reduction in alerting volume, while remaining alerts are higher fidelity and more indicative of true security issues. Instead of investigating dozens of low-confidence detections, your team focuses on correlated, context-rich incidents.

RBA uses the existing Splunk Enterprise Security correlation rule framework to collect interesting events into a single index with shared language. This transforms traditional alerts into observations that correlate into high-fidelity security stories for analysts to investigate [9].

2. Automate Triage with Splunk SOAR to Eliminate Manual Work

If your analysts are manually investigating alerts that automation could handle, you're wasting human intelligence on tasks machines do better. Splunk SOAR alerts automation transforms reactive incident response into proactive threat management.

Key SOAR capabilities:

• Automated Enrichment: Playbooks automatically gather threat intelligence, user context, and asset information before an analyst sees the alert

• False Positive Filtering: SOAR surfaces only high-priority incidents by automatically triaging and dismissing known false positives

• Conditional Logic: Playbooks adapt based on alert severity, asset value, and user risk level

• Visual Workflow Editor: Build automation logic with drag-and-drop interfaces

The business value: Automating security alert triage can save analysts thousands of hours and millions of dollars per year. More importantly, automation scrutinizes alerts within seconds compared to 10 minutes or more per alert if done manually. This results in smarter breach prevention while freeing analysts for mission-critical tasks.

3. Consolidate Alerts with Event Sequencing and Aggregation

Multiple tools generating independent alerts about the same incident creates artificial alert volume. Splunk's Event Sequencing Engine helps you see the forest, not just individual trees.

How Event Sequencing works: The Event Sequencing engine is a series of chained correlation searches. When conditions of all sequenced searches are met, a single comprehensive event containing all alert data is generated.

The advantage: While each individual correlation search might generate false positives, when seen in combination with the start and end searches, you can be confident this is an incident worth investigating [10]. Event Sequencing identifies actionable threats amidst the daily alert noise.

By correlating related alerts into single, comprehensive incidents, your analysts gain the context they need to make faster decisions while dramatically reducing the cognitive load of tracking multiple separate notifications.

4. Create Better Detectors with Dynamic Thresholds

Many alert storms originate from poorly configured detectors. Static thresholds that trigger on arbitrary metrics generate alerts that lack actionable context.

Principles for effective detector design:

• Alert on User Impact: Focus on actual business impact, not just technical metrics [11]

• Require Human Action: Every alert should define a clear, actionable response

• Use ML-Based Dynamic Thresholds: Machine learning establishes per-entity baselines, detecting anomalies without static thresholds

• Leverage Alert Preview: Estimate alert volume based on historical data before deployment

• Include Context: Provide enough information for analysts to begin troubleshooting immediately

Detectors should signal actual emergencies or imminent emergencies. Everything else is noise. Fine-tune alert configurations to filter out routine fluctuations and reduce unnecessary notifications.

5. Optimize Search Performance and Data Utilization

Here's a reality many organizations face: you're paying for massive Splunk environments, but alerts are generated from poorly optimized searches running against underutilized data. This creates a double problem—wasted resources and ineffective alerting.

A Fortune 500 IT company discovered they were operating a 50TB daily Splunk license with 82% of ingested data remaining underutilized. They had over 2,500 searches running without proper metadata, degrading performance while generating alerts of questionable value. The root cause? Lack of visibility into which data sources actually delivered operational value versus those generating noise.

This is where data utilization analysis becomes transformative. Understanding which data sources drive value helps you:

• Improve alert quality by focusing detection on high-value, actively monitored data sources

• Reduce alerts from noisy, low-value data streams

• Optimize search performance by fixing inefficient queries

• Reallocate licenses from underutilized sources to high-priority security data

When you identify which data sources aren't being utilized effectively, you can dramatically improve SOC alert management by reducing false positives and focusing your team's attention on alerts that matter.

6. Right-Route Data with Federated Search and Tiered Storage

Not all data deserves expensive hot storage with active alerting. Organizations often apply uniform retention policies and monitoring approaches across all data sources, even when business requirements vary dramatically.

Smart data routing strategies:

• Federated Search for S3: Route low-value, high-volume data to S3 and search without ingestion when needed

• Ingest Actions: Filter, mask, or route data at the edge using Edge/Ingest Processors

• Right-Sized Retention: Apply appropriate retention periods based on actual business requirements

• Data Quality Issues: Address data quality problems that generate alert noise

When you identify that a small percentage of data sources generate the majority of volume, you gain the intelligence needed to route high-volume, low-value data away from expensive hot storage—reducing both costs and alert noise.

7. Establish Alert Governance and Team Workflows

Technology alone won't solve alert fatigue. According to research, 79% of information security professionals report that their teams are negatively affected by alert fatigue [12]. You need clear processes for alert creation, maintenance, and escalation.

Essential governance practices:

• Alert Lifecycle Management: Require justification for new alerts and periodic review of existing alerts

• Clear Escalation Paths: Define when and how to escalate alerts [13]

• Routing Discipline: Send alerts only to teams with scope to action them

• Runbook Requirements: Every alert should link to clear investigation and remediation procedures

• Regular Training: Keep teams updated on tools, attack techniques, and efficient alert handling

Organizations implementing strong alert governance report not just reduced alert volume, but improved team morale and better incident response outcomes. When analysts trust that alerts are legitimate and actionable, engagement increases dramatically.

8. Measure, Monitor, and Continuously Improve

Splunk optimization isn't a one-time project—it's an ongoing maturity journey. Establish metrics to track progress and identify areas for improvement.

Key metrics to monitor:

• Alert-to-Incident Ratio: What percentage of alerts become actual incidents requiring investigation?

• Mean Time to Detect (MTTD): How quickly does your team identify real threats?

• Mean Time to Respond (MTTR): With better alert quality and automation, response times should decrease

• Alert Volume Trends: Track daily/weekly alert volumes categorized by source, severity, and disposition

• Data Utilization Scores: Monitor which data sources drive value versus those generating noise

Use these metrics to justify continued investment, celebrate wins with leadership, and identify opportunities for further improvement. Organizations that treat alert management as continuous improvement see sustained benefits over time.

Conclusion: From Reactive to Proactive Security Operations

Alert fatigue isn't inevitable. Organizations implementing strategic optimization achieve dramatic reductions in alert volume—often 50-90%—while improving detection quality and analyst effectiveness.

The key is approaching Splunk optimization holistically:

• Better Technology: Risk-Based Alerting, SOAR automation, ML-based detectors

• Smarter Data Management: Data routing, retention optimization, utilization visibility

• Disciplined Processes: Alert governance, measurement, continuous improvement

But optimization starts with visibility. You can't improve what you can't measure. Understanding which data sources drive value, which searches are inefficient, and where opportunities exist for optimization is foundational to success.

How bitsIO Can Help

At bitsIO, we help organizations maximize their Splunk investment through intelligent optimization and AI alert reduction strategies. Our datasensAI solution provides comprehensive visibility into data utilization, helping you identify which sources generate valuable alerts versus noise.

What datasensAI delivers:

• Data Scoring: Algorithms assign scores to each data source based on utilization and knowledge object creation—dashboards, reports, alerts, searches

• High Score Sources: Actively used and optimized data that drives effective alerting

• Low Score Sources: Underutilized data ripe for optimization or routing to lower-cost storage

• Search Intelligence: Identification of inefficient searches with specific performance recommendations

• AI-Driven Recommendations: Insights aligned with MITRE ATT&CK framework for security use cases

• DMX Integration: Leverage Splunk Data Management Extensions to filter noise and enrich high-value data at the edge

Real Results: Our Fortune 500 IT client increased data utilization from 18% to 80% and optimized 2,500+ inefficient searches, dramatically improving alert quality while reducing costs.

Why partner with bitsIO:

• Minimal time commitment: Just 2-4 hours to run assessment reports—no direct access required

• Fast results: Actionable insights delivered in days, not weeks

• Expert support: 50+ certified Splunk consultants with deep expertise in security operations

• Proven track record: Trusted by 300+ enterprise clients across 5 countries

• Splunk-certified: Quality-assured solution seamlessly integrated with your existing Splunk environment

As a trusted global Splunk partner and Inc 5000 company, bitsIO brings proven expertise in Splunk Enterprise Security, SOAR, ITSI, and professional services implementations.

Ready to Transform Your SOC Alert Management?

Stop drowning in alerts. Start with a comprehensive data utilization assessment to identify optimization opportunities and improve alert quality.